Starting with AWS can be overwhelming, and the main reason for that is the number of services they offer! When you open the All Services tab, you see a long list and no indication of where to start!

So what’s a good strategy to tackle this huge list? Should you go from mandatory to nice-to-have services? From most to least common? By decreasing dependency? The last option could be a good method, but the problem is that services depend on each other!

Worry no more, because I have an answer! I’ve combined material from course outlines I found online and references from within AWS services. On today’s agenda: IAM, CloudFront, and S3.

Are you ready to start?

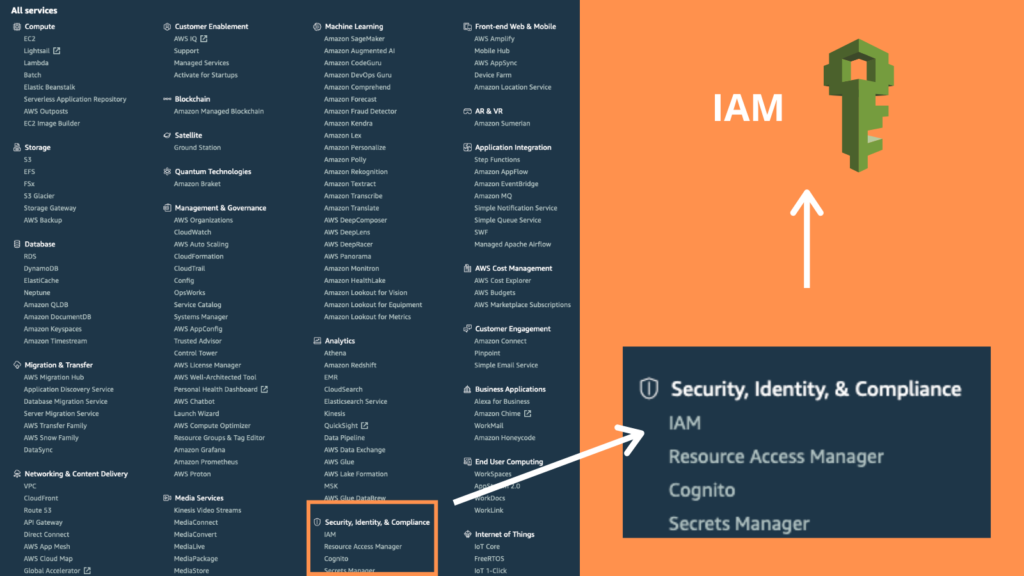

Identity and Access Management (IAM)

IAM allows you to give access to AWS services by creating IAM identities: Users, Groups, and Roles. These identities are created globally, not per region. (I talked about regions in the previous blog post.)

You can access the AWS platform in 3 ways:

- Via the AWS console: Logging in to the website with your AWS account, the GUI version.

- Programmatically (using the command line), with an access key ID and a secret access key: We’ll see an example when we talk about S3.

- Using the SDK (Software Development Kit): Within your code, calling AWS services through the API for your programming language of choice. This is not part of the AWS Cloud Practitioner Certification exam, so it won’t be covered here.

Let’s talk about IAM Service’s basic terms and features:

Security Status

When you go to the AWS Console -> IAM -> Dashboard, you will see the Security Status. The goal is to make all 5 ticks green.

Let’s go over them and see what each one of them means:

1. Delete your root access keys

Ok, before talking about root access keys, what are access keys?

Access keys are long-term credentials for an IAM user or the AWS account root user. You can use access keys to sign programmatic requests to the AWS CLI or AWS API (directly or using the AWS SDK). Access keys consist of two parts: an access key ID and a secret access key. Like a user name and password, you must use both together to authenticate your requests.

The root user is the account tied to the email address you signed up with. As a best practice, you shouldn’t use root in the AWS console! Root can do anything, so you shouldn’t give its credentials to anyone, and that is also why its access keys should be deleted.

2. Activate MFA on your root account

Multi-factor authentication (MFA) is a method in which a user is granted access to a website or application only after successfully presenting two or more pieces of evidence to an authentication mechanism.

For example, when I want to log into my Gmail account from a new computer, I need to both enter my password and then approve on my phone that I am trying to sign in.

3. Create individual IAM users

You manage access in AWS by creating policies and attaching them to IAM identities or AWS resources.

A policy is an object in AWS that, when associated with an identity or resource, defines its permissions.

Policies always come in JSON format. In the IAM policies list, the policies with the orange logo next to them are the ones AWS manages.
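To make the JSON format concrete, here’s a minimal Python sketch that builds a read-only policy for a single S3 bucket. The bucket name `example-bucket` and the helper name `read_only_s3_policy` are my own placeholders, not something AWS defines; the `Version`, `Statement`, `Effect`, `Action`, and `Resource` keys are the standard policy elements.

```python
import json

# Hypothetical bucket name, used only for illustration.
BUCKET = "example-bucket"


def read_only_s3_policy(bucket: str) -> dict:
    """Build an identity-based policy that allows listing and reading one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Reading is granted on the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


policy = read_only_s3_policy(BUCKET)
print(json.dumps(policy, indent=2))
```

The printed JSON is the kind of document you would paste into the policy editor before attaching the policy to a user or a group.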

The only opportunity to view the secret access key and password of a new user is right after creating the user, so you should download the credentials at that point.

4. Use groups to assign permissions

A group is the second type of IAM identity we’ll learn about today. It is simply a collection of users, who inherit all the permissions the group has. Examples: administrators, developers, HR (human resources), finance, etc.

Setting up permissions for a group is done by attaching a policy to that group.

5. Apply an IAM password policy

This is where you decide the rules for the passwords your users can create: minimum length, required character types, rotation period, etc.
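If you want a feel for what such rules do, here’s a small Python sketch that checks a password against a made-up policy. The `PasswordPolicy` class, its default values, and the `violations` helper are all assumptions for illustration; IAM enforces its password policy on its own side, so this only mimics the idea.

```python
import string
from dataclasses import dataclass


@dataclass(frozen=True)
class PasswordPolicy:
    """Hypothetical rule set; the defaults are illustrative, not AWS defaults."""
    min_length: int = 14
    require_uppercase: bool = True
    require_lowercase: bool = True
    require_digit: bool = True
    require_symbol: bool = True


def violations(password: str, policy: PasswordPolicy) -> list:
    """Return a list of human-readable rule violations (empty if the password passes)."""
    problems = []
    if len(password) < policy.min_length:
        problems.append(f"shorter than {policy.min_length} characters")
    if policy.require_uppercase and not any(c.isupper() for c in password):
        problems.append("no upper-case letter")
    if policy.require_lowercase and not any(c.islower() for c in password):
        problems.append("no lower-case letter")
    if policy.require_digit and not any(c.isdigit() for c in password):
        problems.append("no digit")
    if policy.require_symbol and not any(c in string.punctuation for c in password):
        problems.append("no symbol")
    return problems


print(violations("short", PasswordPolicy()))
print(violations("Correct-Horse-42-Battery", PasswordPolicy()))
```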

IAM Credential Reports

A credential report lists all the users in your account, including:

- For each password: enabled or not, when it was last used, when it was changed and when it must be changed.

- For each access key: active or not, when it was last used, when it was last rotated, and which service it was last used on.

- MFA: whether MFA has been enabled.

You can generate and download a credential report by going to Credential Report on the sidebar menu in the AWS console.
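The downloaded report is a CSV file, so it’s easy to audit with a few lines of code. Below is a rough Python sketch that flags users who have a password but no MFA, and checks whether root still has an active access key. The column names are a subset of the ones in the report; the sample rows, the user names, and the two helper functions are invented for this example.

```python
import csv
import io

# Made-up sample data using a subset of the credential report columns.
SAMPLE_REPORT = """user,password_enabled,password_last_used,mfa_active,access_key_1_active,access_key_1_last_rotated
<root_account>,not_supported,2021-01-05T10:00:00+00:00,false,true,2020-01-01T00:00:00+00:00
alice,true,2021-03-01T09:30:00+00:00,true,false,N/A
bob,true,no_information,false,true,2020-06-15T12:00:00+00:00
"""


def users_without_mfa(report_csv: str) -> list:
    """Users that can log in with a password but have not enabled MFA."""
    rows = csv.DictReader(io.StringIO(report_csv))
    return [
        row["user"]
        for row in rows
        if row["password_enabled"] == "true" and row["mfa_active"] != "true"
    ]


def root_has_active_access_key(report_csv: str) -> bool:
    """True if the root account row still shows an active access key."""
    rows = csv.DictReader(io.StringIO(report_csv))
    return any(
        row["user"] == "<root_account>" and row["access_key_1_active"] == "true"
        for row in rows
    )


print(users_without_mfa(SAMPLE_REPORT))
print(root_has_active_access_key(SAMPLE_REPORT))
```

In this sample, both findings map straight back to the Security Status ticks: bob needs MFA, and the root access key should be deleted.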

IAM Best Practices

Here are 8 techniques to get maximum security from the IAM service:

- Root Access: Only use the root account to create your AWS account. Do not use it to log in.

- Users: One user = One person. Don’t create phantom users.

- Policies to Groups: Always place users in groups and then apply policies to the groups.

- Password Policies: Have a strong password rotation policy.

- MFA: Always enable MFA wherever possible.

- Roles: Use roles to access various other AWS services.

- Access Keys: Use access keys for programmatic access to AWS (access via the CLI or the code).

- IAM Credential Report: Use IAM Credential Reports to audit the credentials (passwords, access keys, MFA) of your users/accounts.

And here is the best practices summary for those of us with visual memory:

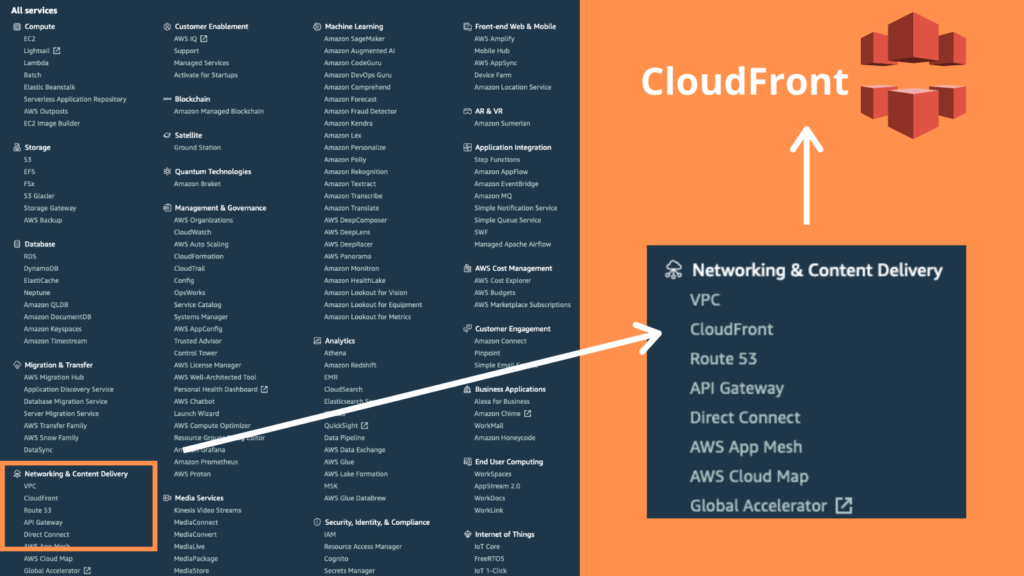

CloudFront

Amazon CloudFront is a fast content delivery network (CDN) service that securely delivers content to customers globally with low latency and high transfer speeds, all within a developer-friendly environment.

A content delivery network (CDN) refers to a geographically distributed group of servers that work together to provide fast delivery of Internet content.

A CDN allows for the quick transfer of assets needed for loading Internet content, including HTML pages, JavaScript files, stylesheets, images, and videos.

As you can see above, without a CDN all 3 users need to access the content from the origin. With a CDN, only the first user will access the content from the origin and the rest will enjoy a faster delivery because the data is already cached.

But what happens if the cached resource is not up to date? There is a solution for that: TTL (time to live). You define how long a cached resource stays valid before it has to be fetched from the origin again.
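Here’s a toy Python sketch of the TTL idea. It is not how CloudFront is implemented; the `EdgeCache` class, the 60-second TTL, and the `/logo.jpg` path are assumptions I made up to show the behaviour. Time is passed in explicitly so the example is deterministic.

```python
from typing import Callable, Dict, Tuple


class EdgeCache:
    """A tiny cache that re-fetches from the origin once an entry's TTL has passed."""

    def __init__(self, fetch_from_origin: Callable[[str], str], ttl_seconds: float):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._store: Dict[str, Tuple[str, float]] = {}  # path -> (body, expires_at)
        self.origin_hits = 0

    def get(self, path: str, now: float) -> str:
        cached = self._store.get(path)
        if cached is not None and now < cached[1]:
            return cached[0]  # still fresh: serve from the cache
        # Missing or expired: go back to the origin and cache the result.
        self.origin_hits += 1
        body = self._fetch(path)
        self._store[path] = (body, now + self._ttl)
        return body


origin = {"/logo.jpg": "v1"}
cache = EdgeCache(lambda path: origin[path], ttl_seconds=60)

first = cache.get("/logo.jpg", now=0)    # cache miss, fetched from the origin
origin["/logo.jpg"] = "v2"               # the file changes at the origin
second = cache.get("/logo.jpg", now=30)  # TTL not reached, the old copy is served
third = cache.get("/logo.jpg", now=61)   # TTL passed, fetched from the origin again
print(first, second, third, cache.origin_hits)
```

The second request shows the trade-off: a longer TTL means fewer trips to the origin, but users may see a stale copy until it expires.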

Key Terminology for CloudFront

- Origin is the source of the files that the CDN will distribute. This can be, for example, an S3 Bucket, an EC2 instance, or an Elastic Load Balancer. (We will learn what EC2 and Elastic Load Balancer are in the next blog post. I told you the services are dependent on each other!)

- Edge Location is the location where content will be cached. This is separate from an AWS Region/Availability Zone.

- Distribution is the name given to the CDN which consists of a collection of edge locations.

How CloudFront Delivers Content to Your Users

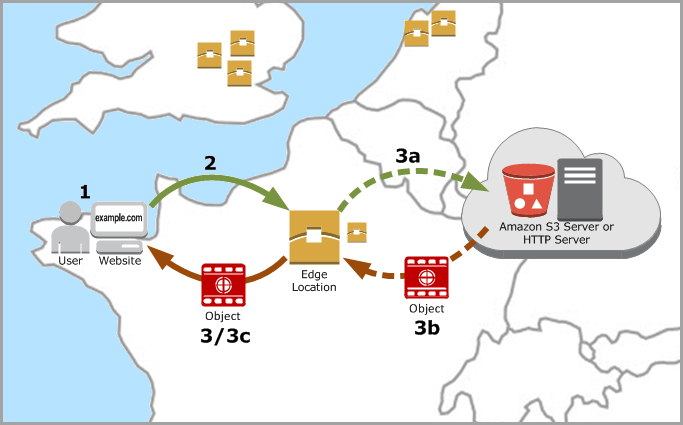

After configuring CloudFront to deliver the content, here’s what happens when users request the files:

1. A user accesses your website or application and requests one or more files, such as an image file and an HTML file.

2. DNS routes the request to the CloudFront POP (Point of Presence: edge locations and regional mid-tier caches) that can best serve it, which is typically the nearest one in terms of latency.

3. In the POP, CloudFront checks its cache for the requested files. If the files are in the cache, CloudFront returns them to the user.

If the files are not in the cache, it does the following:

a. CloudFront compares the request with the specifications in your distribution and forwards the request for the files to your origin server for the corresponding file type — for example, to your Amazon S3 bucket for image files and to your HTTP server for HTML files.

b. The origin servers send the files back to the edge location.

c. As soon as the first byte arrives from the origin, CloudFront begins to forward the files to the user. CloudFront also adds the files to the cache in the edge location for the next time someone requests those files.
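The steps above can be sketched in a few lines of Python. This is only a model of the flow, under my own assumptions: the path patterns, the origin names (`s3-bucket`, `http-server`), and the file contents are hypothetical, and a plain dictionary stands in for the edge cache.

```python
from fnmatch import fnmatchcase

# Hypothetical path patterns and origin names, checked in priority order.
BEHAVIORS = [
    ("/images/*", "s3-bucket"),
    ("*.html", "http-server"),
]
DEFAULT_ORIGIN = "http-server"

# Pretend origins: origin name -> {path: file contents}.
ORIGINS = {
    "s3-bucket": {"/images/logo.jpg": "<jpeg bytes>"},
    "http-server": {"/index.html": "<h1>Hello</h1>"},
}


def pick_origin(path: str) -> str:
    """Step 3a: compare the request with the distribution's settings."""
    for pattern, origin in BEHAVIORS:
        if fnmatchcase(path, pattern):
            return origin
    return DEFAULT_ORIGIN


def handle_request(path: str, edge_cache: dict) -> tuple:
    """Return (body, source), where source is 'edge cache' or the origin name."""
    if path in edge_cache:               # step 3: the file is already cached
        return edge_cache[path], "edge cache"
    origin = pick_origin(path)           # step 3a: choose the origin
    body = ORIGINS[origin][path]         # step 3b: the origin sends the file back
    edge_cache[path] = body              # step 3c: cache it for the next request
    return body, origin


edge_cache = {}
print(handle_request("/images/logo.jpg", edge_cache))
print(handle_request("/images/logo.jpg", edge_cache))
print(handle_request("/index.html", edge_cache))
```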

Amazon CloudFront can be used to deliver an entire website, including dynamic, static, streaming, and interactive content using a global network of edge locations. Requests for content are automatically routed to the nearest edge location, so content is delivered with the best possible performance.

There are two different types of distributions on CloudFront:

- Web distribution: typically used for websites

- RTMP: used for media streaming

Important to know:

- Edge locations are not read-only: you can write to them too, which means you can put an object to them. For example, S3 Transfer Acceleration (which we will learn about soon!) uses edge locations to upload files to S3.

- You can clear (invalidate) cached objects, but you may be charged for doing that.

- Deleting a distribution requires disabling it first. This is an additional step to protect you from oopsies.

- When you create a distribution, you will have an option to restrict bucket access. Restricting bucket access means that access through the bucket link is disabled, so the only access is through the distribution. Here is an example of a CloudFront URL, so you’ll know what it looks like: hd38q7yr38ih.cloudfront.net/cupofcodelogo.jpg

S3 — Simple Storage Service

Introduction and basic features, storage classes,

What Is S3?

Simple Storage Service (S3) provides secure, durable, and highly scalable object storage. S3 stores and retrieves any amount of data from anywhere on the web. It’s perfect for flat files, which are files that don’t change, such as movies and static HTML pages. The data is spread across multiple devices and facilities.

S3 is object-based, which means you upload whole files as objects (as opposed to other storage architectures like file systems and block storage). A file can be anywhere from 0 bytes to 5 TB and is stored in a bucket, which is like a folder in the cloud. You get an HTTP 200 code if the upload was successful.

An example of a bucket URL:

https://s3-eu-west-1.amazonaws.com/desktopdir

Because the bucket name becomes part of a DNS entry, it must be globally unique.

Basic Features

- Tiered Storage and lifecycle management (which storage tier it goes to)

- Versioning: so you could roll back

- Encryption: Encrypt at rest. Usually, we hear about encryption when transferring data, like with HTTPS. But here, S3 gives you Encryption at rest, which is kind of like keeping your money in a safe in your house.

- Secure your data using Access Control Lists and Bucket Policies: Access Control Lists are on an individual file basis, bucket policies work across an entire bucket.

- Unlimited Storage: S3 comes with the following guarantees from Amazon: you can always* upload a file, and it won’t** get lost.

* Amazon guarantees 99.9% availability.

** Amazon guarantees 99.999999999% durability for S3 information (11 nines). It’s very unlikely you are going to lose a file or access to that file.
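Those percentages are easier to appreciate as numbers. Here’s a quick back-of-the-envelope calculation in Python. I’m assuming the availability figure applies over a 365-day year and treating durability as the yearly chance of keeping any single object; the count of 10,000,000 stored objects is just an example figure.

```python
from fractions import Fraction

HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years

# Exact fractions avoid floating-point noise with this many nines.
availability = Fraction("0.999")
durability = Fraction("0.99999999999")  # 11 nines

# 99.9% availability still leaves room for some downtime every year.
max_downtime_hours = float((1 - availability) * HOURS_PER_YEAR)

# Expected number of objects lost per year for an example bucket size.
objects_stored = 10_000_000
expected_losses_per_year = (1 - durability) * objects_stored
years_per_lost_object = 1 / expected_losses_per_year

print(f"Allowed downtime: {max_downtime_hours:.2f} hours per year")
print(f"Expected losses: one object every {int(years_per_lost_object):,} years")
```

Under these assumptions, that works out to under nine hours of downtime a year, and one lost object every 10,000 years when storing ten million objects.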

Data Consistency In S3

- Read-after-write consistency for PUTs of new objects: right after you write a new file, you can immediately read it.

- Overwrite PUTs and DELETEs (changes to an existing object): historically these were only eventually consistent, so reading right after an update or delete could return the older version while the change propagated. Since December 2020, S3 provides strong read-after-write consistency for these operations too, so a read that follows a successful write returns the latest data.

S3 Transfer Acceleration

S3 Transfer Acceleration enables fast, easy, and secure transfers of files over long distances between your end users and an S3 bucket.

Transfer Acceleration takes advantage of Amazon CloudFront’s globally distributed edge locations. As the data arrives at an edge location, data is routed to Amazon S3 over an optimized network path.

Say users all around the world want to upload a file to the bucket. When you enable transfer acceleration, those users will actually upload the file to the nearest edge location, and the edge location will use Amazon’s internal network to upload it directly to the S3 bucket.

Cross-Region Replication For Disaster Recovery

We have a bucket in one region, and as soon as a user uploads a file to the bucket, that file is automatically replicated to a bucket in another region. The result is a primary and a secondary bucket for disaster recovery.

S3 Versioning

AWS gives you the option to go to the bucket properties and enable bucket versioning. Once it is enabled, you can only suspend versioning; it cannot be disabled or removed!

After enabling the bucket versioning, when you go to the objects table you will see there is a toggle switch named List Versions. Enabling this will add a column called Version ID and expand our list to show all versions of all objects.

For the files you uploaded before enabling versioning, it won’t show any version ID, but from now on, when you upload/update a file, you will see a version ID.

How do you roll back to the previous version? You click on the updated version of the file and delete it. Now you will see only the previous version in the list. Note that S3 stores all versions of an object, even if you delete the object!
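To see why deleting the newest version works as a rollback, here’s a simplified Python model of a versioned bucket. The `VersionedBucket` class and the `v1`, `v2` style IDs are my own invention (real S3 version IDs are opaque strings), and the model ignores delete markers, so treat it as a sketch of the idea only.

```python
import itertools
from collections import defaultdict


class VersionedBucket:
    """Toy model: every put adds a version; the newest version is the current one."""

    def __init__(self):
        self._versions = defaultdict(list)  # key -> [(version_id, body)], oldest first
        self._ids = itertools.count(1)

    def put(self, key: str, body: str) -> str:
        version_id = f"v{next(self._ids)}"  # made-up ID format
        self._versions[key].append((version_id, body))
        return version_id

    def get(self, key: str) -> str:
        return self._versions[key][-1][1]  # body of the newest version

    def delete_version(self, key: str, version_id: str) -> None:
        self._versions[key] = [v for v in self._versions[key] if v[0] != version_id]

    def list_versions(self, key: str) -> list:
        return [version_id for version_id, _ in self._versions[key]]


bucket = VersionedBucket()
old = bucket.put("notes.txt", "hello")
new = bucket.put("notes.txt", "hello world")
print(bucket.get("notes.txt"), bucket.list_versions("notes.txt"))

bucket.delete_version("notes.txt", new)  # roll back by deleting the newest version
print(bucket.get("notes.txt"), bucket.list_versions("notes.txt"))
```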

Good to know:

- S3 versioning can integrate with lifecycle rules: old versions that are not often used can be moved to another storage class.

- You can use versioning with MFA delete capabilities, so you can minimize the risk of malicious deletion of an object.

Storage Classes

S3 provides Tiered Storage: 6 storage classes that offer different advantages. S3 also provides Lifecycle Management, which gives you the ability to decide which storage tier your file goes to and when.

- S3 Standard: good availability and durability, stored redundantly across multiple devices in multiple facilities, and is designed to sustain the loss of 2 facilities concurrently.

- S3 IA (Infrequent Access): For data that is accessed less frequently, but requires rapid access when needed. Lower storage fee than Standard, but you are charged a retrieval fee.

- S3 One Zone IA: Ideal when you want a lower-cost option for infrequently accessed data, but do not require the multiple AZ data resilience.

- S3 Intelligent Tiering: Designed to optimize costs by automatically moving data to the most cost-effective access tier, without performance impact or operational overhead.

- S3 Glacier: A secure, durable, and low-cost storage class for data archiving. You can reliably store any amount of data at costs that are competitive with or cheaper than on-premises solutions. Retrieval times are configurable from minutes to hours.

- S3 Glacier Deep Archive: S3’s lowest-cost storage class where a retrieval time of 12 hours is acceptable.
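Lifecycle Management boils down to rules of the form “after N days, move the object to a cheaper class”. Here’s a small Python sketch of that decision. The day thresholds are hypothetical values I picked for the example, and the `storage_class_for` helper is not an AWS function; the class names follow the identifiers the S3 API uses.

```python
# Hypothetical lifecycle thresholds, checked from oldest to newest: (minimum age in days, storage class).
TRANSITIONS = [
    (365, "DEEP_ARCHIVE"),
    (90, "GLACIER"),
    (30, "STANDARD_IA"),
]


def storage_class_for(age_days: int) -> str:
    """Pick the storage class an object of this age would be in under the rules above."""
    for min_age, storage_class in TRANSITIONS:
        if age_days >= min_age:
            return storage_class
    return "STANDARD"  # young objects stay in the default class


for age in (0, 30, 100, 400):
    print(age, storage_class_for(age))
```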

And here is a more detailed comparison:

You are charged for S3 in the following ways:

- storage

- requests

- storage management pricing

- data transfer pricing

- transfer acceleration

- cross-region replication pricing

Wow wow wow, that was a lot! We need a meme break.

Lastly, we will see a hands-on example of creating an S3 bucket and building a website in S3. You can follow along or just read my actions. This will teach us in more depth about the existing S3 features and actions.

Creating an S3 Bucket

First, notice that when you go to S3 in the AWS console, the region changes to Global. Buckets are listed globally, but each one is stored in an individual region.

Bucket names become part of a web address, so they should be DNS compliant.

DNS compliance means that it’s a valid web address: one that doesn’t contain upper-case letters, underscores, etc.
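If you’d like to check a name before trying it in the console, here’s a simplified validator in Python. It covers only the main naming rules (3 to 63 characters; lower-case letters, digits, dots, and hyphens; starts and ends with a letter or digit; no consecutive dots; not shaped like an IP address). The function name is my own, and the real rule set has a few more restrictions, so take it as a first filter.

```python
import re

# 3-63 characters: lower-case letters, digits, dots, hyphens; alphanumeric at both ends.
_NAME_PATTERN = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
# Names shaped like an IPv4 address (e.g. 192.168.0.1) are not allowed.
_IP_PATTERN = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Simplified check of the main S3 bucket naming rules."""
    if not _NAME_PATTERN.fullmatch(name):
        return False
    if ".." in name:
        return False
    if _IP_PATTERN.fullmatch(name):
        return False
    return True


for candidate in ("bucketofcode", "BucketOfCode", "my_bucket", "ab", "192.168.0.1"):
    print(candidate, is_valid_bucket_name(candidate))
```

Passing this check doesn’t mean the name is available: it still has to be globally unique.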

You will see an option to block public access, which ensures that our buckets are always private. Since we want public access later on for the website, you need to uncheck that box.

The bucket has a few sections:

- Properties: contains actions like adding static website hosting and enabling transfer acceleration.

- Permissions: where you can manage public access settings.

- Management: lifecycle, replication, analytics, metrics, inventory.

Uploading a file

Uploading a file will return an HTTP 200 (OK) response.

Each object is stored as a key-value pair, along with a few extra attributes:

- Key is the name of the object

- Value is the data that is made up of a sequence of bytes.

- Version ID

- Metadata (data about data you are storing)

- Subresources: Access control lists, torrent

Click on the bucket name, and click the upload button and upload a file. After uploading, if you click on the file and then on the Link, you will get an error message: access denied, and that is because the file is not public.

By default, anything you put into S3 is not public. How do you make it public? Click on the file -> actions -> make public.

Another way is to click on permissions -> public access -> everyone.

Clicking on a specific file -> properties, you will see storage class, Encryption, metadata, tags, and object lock.

You can change the storage class on demand, and it doesn’t require any reboot.

Restricting Bucket Access:

- Bucket Policies: apply across the whole bucket

- Object Policies: apply to individual files

- IAM Policies to Users & Groups: apply to Users & Groups

Creating A Website On S3

You can use S3 to host static websites (something.html). Dynamic websites, such as ones that require a DB connection, cannot be hosted on S3.

S3 scales automatically to meet your demand. Many enterprises will put static websites on S3 when they think there is going to be a large number of requests (such as for a movie preview).

Creating a website on S3 is done by:

- Uploading index.html and error.html files into the bucket and giving them public access

- Enabling the bucket to become a website

Let’s dive deep:

1. Uploading HTML files into the bucket and giving them public access

When you create the bucket with all the default settings — it will block all public access, but we want our HTML files to be public. This is done by clicking on the file -> actions -> make public.

This action is blocked initially, because of the bucket settings. In order to unblock this action, we’ll go to our bucket permissions and edit it to enable public access. Now clicking on the file -> actions -> make public will work.

Enabling access per file is not the best practice in this case. We want the whole bucket to be public, and that’s done with a bucket policy. You can modify the policy by going to permissions -> bucket policy -> edit.

Here is an example of a policy. Let’s assume my bucket name is bucketofcode (remember, bucket names can’t contain upper-case letters):

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": [
                    "s3:GetObject"
                ],
                "Resource": [
                    "arn:aws:s3:::bucketofcode/*"
                ]
            }
        ]
    }

S3 is extra safe, so in order to make an entire S3 bucket public you need to: untick the Block public access boxes and then use the bucket policy.

2. Enabling the bucket to become a website

Two public HTML files are not a website!!! To turn them into one, we go to the bucket -> properties -> static website hosting -> edit, where we are asked to provide an index document and an error document.
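What do the index and error documents actually do? Here’s a rough Python model of how a static website endpoint answers requests: the root path (or a path ending in a slash) gets the index document, and a missing file gets the error document with a 404 status. The `resolve` function and the sample file contents are assumptions for illustration, not S3’s real implementation.

```python
# Pretend bucket contents: object key -> file body.
BUCKET_OBJECTS = {
    "index.html": "<h1>Home</h1>",
    "error.html": "<h1>Oops</h1>",
    "about.html": "<h1>About</h1>",
}


def resolve(path, objects, index_document="index.html", error_document="error.html"):
    """Return (status_code, body) for a request path, like a static website endpoint would."""
    key = path.lstrip("/")
    if key == "" or key.endswith("/"):
        key += index_document          # directory-style request: serve the index document
    if key in objects:
        return 200, objects[key]
    # Unknown key: serve the error document with a 404 status.
    return 404, objects.get(error_document, "Not Found")


print(resolve("/", BUCKET_OBJECTS))
print(resolve("/about.html", BUCKET_OBJECTS))
print(resolve("/missing.html", BUCKET_OBJECTS))
```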

That’s it! And to finish with a smile, here is a precious video I found on YouTube:

“If you’re planning to use S3

Encrypt at rest

Rotate your keys

MFA your IAMs

Use SDKs for hiding them, and

Minimize your ACL

Password strength on overkill

And if configured properly

Buckets never leak”

Pop quiz! Which of these lyrics are IAM practices and which are S3 practices?

Wow, we learned a lot today! We started with IAM and its best practices, continued with CloudFront and CDN, and finished with the wondrous world of S3. It’s not so scary anymore, right?

I hope you enjoyed reading this article and learned something new! Want to learn more? In the previous blog post, we talked about Cloud Computing and global AWS infrastructure, and in the next blog post, we will talk about more AWS services. Stay tuned!

If you read this, you’ve reached the end of the blog post, and I would love to hear your thoughts! Was I helpful? Do you also use AWS at work? Here are the ways to contact me:

Facebook: https://www.facebook.com/cupofcode.blog/

Instagram: https://www.instagram.com/cupofcode.blog/

Email: cupofcode.blog@gmail.com